Batch Video Processing

How serverless video processing works for video analytics

In the era of content-driven digital platforms, many bloggers rely on batch video processing at large scale. Efficiency is crucial because the amount of work is huge. I recently undertook a project to build a robust and scalable serverless architecture that automates the retrieval, processing, and storage of video data. The project uses AWS services to provide high availability, fault tolerance, and efficiency in handling video-related data operations.

Initial project objectives

The goal was to eliminate manual intervention in video processing tasks and replace it with an automated, scalable solution. The architecture needed to be fault-tolerant, ensure data integrity, and support high throughput.

Best practices implemented

This project follows several AWS and DevOps best practices, focusing on security, scalability, and efficiency. Below is a breakdown of key decisions made during the development:

- Infrastructure as Code (IaC): By using AWS Serverless Application Model (SAM), all resources are defined as code, ensuring consistent and repeatable deployments. SAM made it easy to deploy Lambda functions, S3 buckets, SQS queues, and other resources automatically.

- Event-driven Architecture: AWS Lambda functions are triggered by specific events, such as the arrival of new videos or metadata changes. This event-driven model maximizes resource efficiency by running functions only when needed.

- Dead Letter Queues (DLQs): To ensure no messages are lost, DLQs capture failed messages, allowing for later reprocessing. This improves data integrity by making sure every video and its metadata are processed accurately.

- Replay Mechanism: An additional replay mechanism was implemented to guarantee 100% message delivery and zero loss, even during high throughput or when unexpected issues arise. This increases the reliability of the overall architecture.

- Enhanced Security: Credentials were moved from environment variables to AWS Secrets Manager, following AWS security best practices. This ensures that sensitive data, such as API keys, remains secure and is accessed only by authorized services.

- Principle of Least Privilege: All AWS resources are configured to follow the principle of least privilege, ensuring that each service only has the necessary permissions to function. This significantly enhances the security posture of the system.

- Monitoring & Auditing: Every component, from Lambda functions to SQS queues, is monitored using CloudWatch Logs with a defined retention period. This ensures that issues can be identified quickly, and detailed logs are available for auditing and performance reviews.
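
To make the DLQ and replay ideas above more concrete, here is a minimal sketch of what a replay step could look like. It is not the project's actual code: the function name `replay_dlq`, the queue URLs, and the `max_batches` limit are assumptions made for illustration. The `sqs` argument is expected to behave like a boto3 SQS client (for example `boto3.client("sqs")`), and only the standard `receive_message`, `send_message`, and `delete_message` calls are used.

```python
from typing import Any


def replay_dlq(sqs: Any, dlq_url: str, target_url: str, max_batches: int = 10) -> int:
    """Move messages from a dead letter queue back to the main queue.

    `sqs` is assumed to be a boto3 SQS client or an object with the same
    receive_message / send_message / delete_message methods.
    Returns the number of messages that were replayed.
    """
    replayed = 0
    for _ in range(max_batches):
        response = sqs.receive_message(
            QueueUrl=dlq_url,
            MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
            WaitTimeSeconds=1,
        )
        messages = response.get("Messages", [])
        if not messages:
            break  # the DLQ is drained, or temporarily empty
        for message in messages:
            # Re-send first, delete second: a crash between the two calls
            # duplicates a message instead of losing it, so downstream
            # consumers should be idempotent.
            sqs.send_message(QueueUrl=target_url, MessageBody=message["Body"])
            sqs.delete_message(
                QueueUrl=dlq_url, ReceiptHandle=message["ReceiptHandle"]
            )
            replayed += 1
    return replayed
```

Passing the client in as a parameter keeps the function easy to test with a stub and lets the same code run from a Lambda function or a one-off script.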

Target solution and architecture

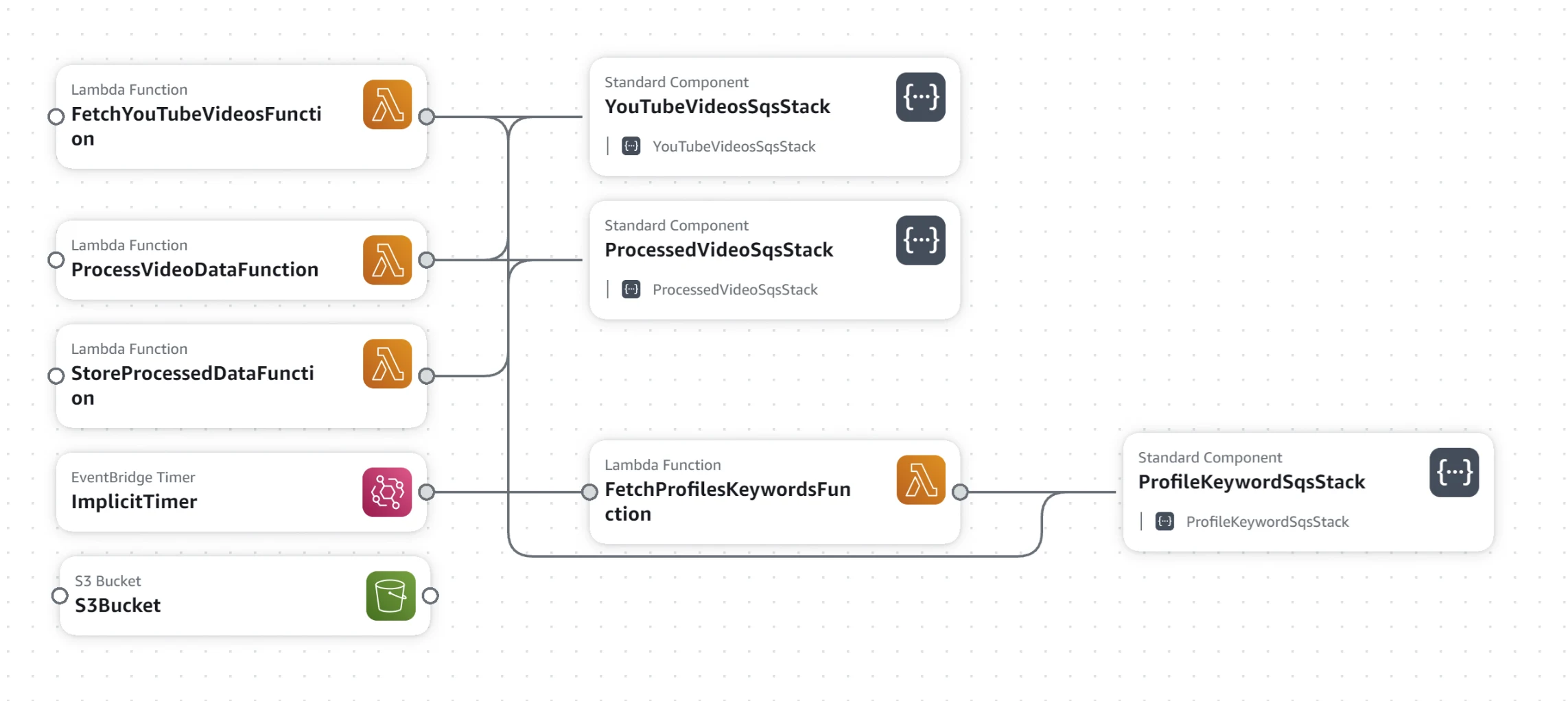

The target solution was built to automate the entire process—from video retrieval to storage—allowing for seamless scalability. The architecture leverages AWS Lambda for compute tasks, S3 for storage, and SQS for messaging. Below is a high-level overview of the architectural components:

Figure 1: AWS Video Processing Architecture


The architecture comprises several key AWS components:

- Lambda Functions: Used for processing and storing video data. Functions are triggered automatically when new videos arrive.

- S3 Buckets: Act as the primary storage for processed videos and their metadata.

- SQS Queues: Responsible for message queuing between different stages of the processing workflow, ensuring smooth data flow.

- EventBridge: Triggers workflows based on scheduled timers for periodic tasks.
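
As a rough illustration of how the Lambda, SQS, and S3 components listed above could fit together, the sketch below stores each queued message as a JSON object in S3. It is a sketch under stated assumptions, not the project's implementation: the function name `store_video_metadata`, the `video_id` field, and the `metadata/<id>.json` key layout are hypothetical. The `s3` argument is expected to behave like a boto3 S3 client, and the returned `batchItemFailures` structure only takes effect if partial batch responses (`ReportBatchItemFailures`) are enabled on the SQS event source mapping.

```python
import json
from typing import Any


def store_video_metadata(event: dict, s3: Any, bucket: str) -> dict:
    """Write each SQS record in a Lambda event to S3 as a JSON object.

    `s3` is assumed to be a boto3 S3 client or an object with a compatible
    put_object method. Returns a partial batch response so that only the
    failed messages are retried.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            video = json.loads(record["body"])
            # Hypothetical key layout: one JSON object per video ID.
            key = f"metadata/{video['video_id']}.json"
            s3.put_object(
                Bucket=bucket,
                Key=key,
                Body=json.dumps(video).encode("utf-8"),
                ContentType="application/json",
            )
        except Exception:
            # Report only this message as failed. SQS retries it and, once
            # the queue's maxReceiveCount is exceeded, moves it to the DLQ.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

In a deployed function, the Lambda entry point would typically create the client once outside the handler and call `store_video_metadata(event, s3_client, bucket_name)`, with the bucket name read from configuration.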

One of the key highlights of this project was the development of automated workflows to handle all aspects of video processing. From fetching new video data to processing and storing it, everything is managed without manual intervention.

The above image shows the automation workflow in action. Key steps include:

- Fetching videos from the YouTube API

- Processing video metadata for storage in S3

- Keyword extraction from videos for SEO purposes

- Storing the processed data in a structured format for further use
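
The keyword extraction and structured storage steps above could be sketched as follows. The original project does not say how keywords are extracted, so this example swaps in a simple word-frequency count over the title and description purely for illustration. The stopword list, the function names `extract_keywords` and `build_record`, and the record fields are all assumptions.

```python
import re
from collections import Counter
from typing import List

# A tiny illustrative stopword list; a real system would use a fuller one.
STOPWORDS = frozenset(
    {"the", "and", "for", "with", "this", "that", "from", "your", "you", "are", "how"}
)


def extract_keywords(text: str, top_n: int = 5) -> List[str]:
    """Return the most frequent non-stopword terms in `text`."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    counts = Counter(w for w in words if len(w) > 2 and w not in STOPWORDS)
    return [word for word, _ in counts.most_common(top_n)]


def build_record(video: dict) -> dict:
    """Combine raw video metadata and extracted keywords into one record."""
    text = f"{video.get('title', '')} {video.get('description', '')}"
    return {
        "video_id": video["video_id"],
        "title": video.get("title", ""),
        "keywords": extract_keywords(text),
    }
```

A record built this way can be serialized to JSON and written to S3 as a single object per video, which keeps the stored data easy to query later.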

Results and summary

By following AWS best practices and automating all critical workflows, this project delivered several key benefits:

- Scalability: The system automatically scales to handle any volume of video data, ensuring no bottlenecks in processing.

- Reliability: With DLQs, replay mechanisms, and automated monitoring, the system guarantees high data integrity and uptime.

- Security: Best practices such as using AWS Secrets Manager and the principle of least privilege ensure robust security at every level.

- Efficiency: Automated workflows reduce manual effort and ensure faster processing, making the system highly efficient and cost-effective.

This serverless video processing solution represents a modern approach to handling video data at scale, making it an ideal architecture for businesses looking to streamline their video operations. By addressing key areas like automation, security, and scalability, this project offers a future-proof solution that adapts to growing data needs.