Silk Mobile Back-End

Back-end cloud solution for a Georgian bank.

The bank aimed to digitally transform its banking services with a comprehensive solution covering loan origination, onboarding, payments, and real-time integration with local data providers. The primary goals were to improve operational efficiency, enhance the customer experience, and maintain compliance with regulatory requirements.

The scope of the project

- Application Processing: Digital submission, document collection, and verification of loan applications.

- Credit Scoring and Decisioning: Automated scoring models, integrations with credit information services, and Revenue Service APIs.

- Compliance & Reporting: Automated regulatory checks and reports adhering to local and international standards.

- Security and Data Privacy: Secure handling of sensitive customer data, ensuring trust and compliance.

- System Integrations: Seamless integration with Core Banking Systems (CBS), CRM tools, and third-party APIs.

- Testing and Quality Assurance: Functional, unit, integration, and end-to-end testing to ensure reliability.

- Infrastructure Setup: Robust, scalable architecture with high availability and disaster recovery features.

With a clear understanding of the project's scope, the architecture needed to balance flexibility and efficiency. To achieve this, the serverless module was chosen as a foundational component, enabling the automation of processes while minimizing operational overhead.

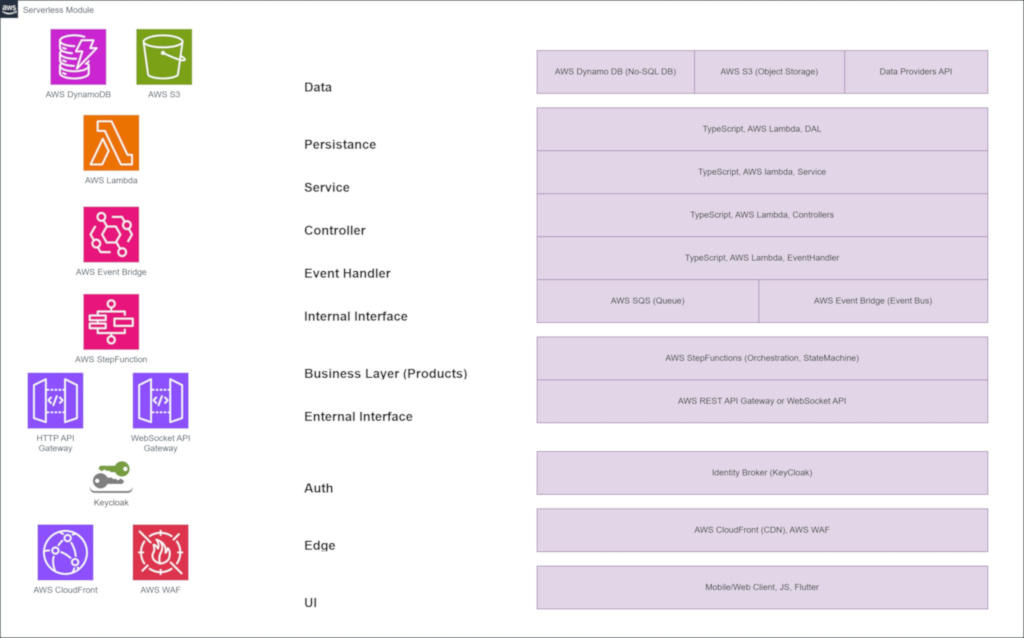

Serverless module

Serverless Module Schema

Serverless Module Schema

The serverless module architecture was designed to streamline application processing and integrations. Core serverless components like AWS Lambda, DynamoDB, and EventBridge were utilized to provide scalability, resilience, and seamless event-driven workflows. AWS Step Functions managed orchestration, ensuring smooth transitions between various services. This approach minimized infrastructure management and allowed the bank to focus on delivering enhanced customer experiences.
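Step Functions orchestration is defined in Amazon States Language (ASL). The Python sketch below builds an ASL definition for a hypothetical loan-application workflow. The state names, Lambda function names, ARNs, region, account ID, and the score threshold of 600 are all placeholders, not the bank's configuration. The sketch shows two ASL features relevant to this design:

- a `Retry` block with exponential back-off on each task;
- a `Catch` block that routes any unrecoverable error to a single failure state.

```python
import json

# Hypothetical Lambda ARNs: region, account ID and function names are placeholders.
ARN = "arn:aws:lambda:eu-central-1:123456789012:function:{}"


def task(function_name: str, next_state: str) -> dict:
    """A Task state that invokes a Lambda function and retries transient failures."""
    return {
        "Type": "Task",
        "Resource": ARN.format(function_name),
        "Retry": [{
            "ErrorEquals": ["States.TaskFailed", "States.Timeout"],
            "IntervalSeconds": 2,   # first retry after 2 s
            "MaxAttempts": 3,
            "BackoffRate": 2.0,     # then 4 s, 8 s
        }],
        # Errors that survive the retries go to one failure state.
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "ApplicationFailed"}],
        "Next": next_state,
    }


definition = {
    "Comment": "Loan application processing (illustrative sketch)",
    "StartAt": "CollectDocuments",
    "States": {
        "CollectDocuments": task("collect-documents", "VerifyDocuments"),
        "VerifyDocuments": task("verify-documents", "ScoreApplication"),
        "ScoreApplication": task("score-application", "Decide"),
        "Decide": {
            "Type": "Choice",
            "Choices": [{
                "Variable": "$.score",
                "NumericGreaterThanEquals": 600,  # hypothetical cut-off
                "Next": "Approved",
            }],
            "Default": "Rejected",
        },
        # Business outcomes are recorded in $.decision rather than failing the run.
        "Approved": {"Type": "Pass", "Result": "APPROVED", "ResultPath": "$.decision", "End": True},
        "Rejected": {"Type": "Pass", "Result": "REJECTED", "ResultPath": "$.decision", "End": True},
        "ApplicationFailed": {"Type": "Fail", "Error": "ProcessingError"},
    },
}

print(json.dumps(definition, indent=2))
```

A loan rejection is modeled as a normal outcome, not a `Fail` state. This keeps genuine processing errors distinguishable from business decisions in execution history and alarms.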

While the serverless module provided agility and seamless integration, certain components, such as scoring engines and decisioning systems, required a more traditional approach. This is where the N-tier architecture came into play, offering the reliability and performance necessary for these critical systems.

N-Tier module

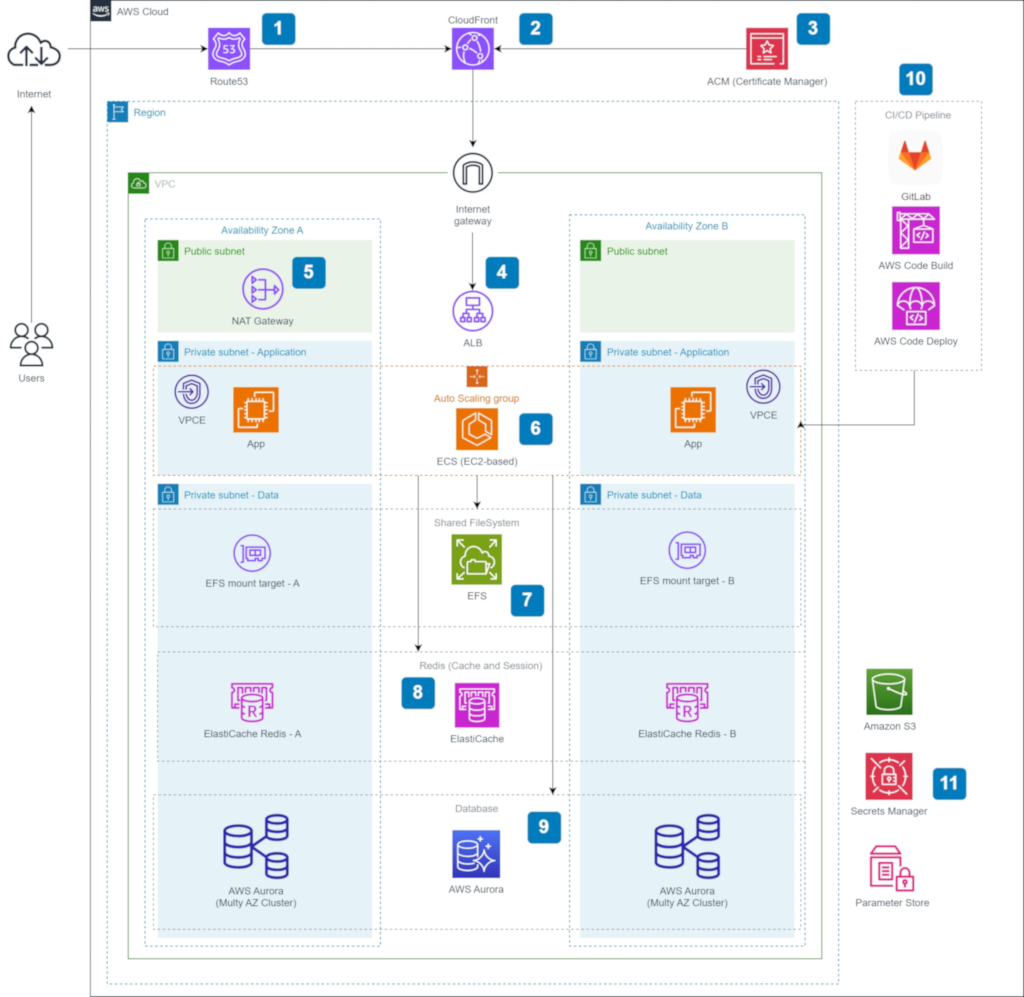

N-tier Module Schema

The scoring engines and decisioning systems were deployed using a traditional N-tier architecture. With Route 53 for DNS resolution and CloudFront for caching and content delivery, the architecture ensured low-latency access. Application Load Balancers distributed traffic efficiently, while auto-scaling ECS clusters maintained performance under varying loads. AWS RDS was employed for data storage, complemented by ElastiCache for session management and caching. This architecture ensured reliability, security, and optimal performance.

- AWS Route 53: Provides scalable DNS resolution. It points the application's domain at the CloudFront distribution so that user requests reach the nearest edge location, which reduces latency and improves the user experience.

- AWS CloudFront: Acts as a content delivery network, caching static and dynamic content at AWS edge locations to ensure low-latency access to the application layer.

- AWS Certificate Manager: Ensures secure communication by managing and provisioning SSL/TLS certificates for encrypted data transfer.

- Application Load Balancer: Distributes incoming traffic efficiently across application nodes to maintain high availability and prevent bottlenecks.

- NAT Gateways: Provide secure outbound internet access for resources within private VPC subnets, maintaining isolation while enabling communication.

- Auto-Scaling with ECS: The application runs on ECS. The cluster is backed by an Auto Scaling group of Amazon Elastic Compute Cloud (EC2) instances, which scales dynamically to handle varying workloads.

- Amazon EFS: Used as a shared file system for storing metadata and files that need to be accessed across multiple application nodes.

- AWS ElastiCache for Redis: Provides a high-performance caching solution, enabling shared sessions and reducing database load.

- AWS RDS: A managed MySQL-compatible database service that ensures reliability, scalability, and automated backups for critical data storage.

- CI/CD Pipeline: Configured with automated build and deployment steps for seamless integration into the AWS infrastructure. Docker images are built during the build phase, and AMIs can be managed separately for deployment.

- Monitoring and Security Tools: Tools such as OWASP, SonarQube, and AWS Security Hub are integrated continuously. They identify vulnerabilities, automate security scans, and help ensure compliance with best practices.
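ElastiCache is typically used with the cache-aside pattern: read from the cache first, and fall back to the database on a miss. The Python sketch below illustrates that pattern in a minimal, self-contained form. All names are hypothetical:

- `TTLCache` is an in-memory stand-in for Redis;
- `get_customer` and `fake_db` are illustrative helpers.

With a real Redis client, the same logic maps onto `GET` and `SET` with an `EX` expiry.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory stand-in for Redis: each value expires `ttl` seconds after it is set."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._data: Dict[str, Tuple[float, object]] = {}

    def get(self, key: str) -> Optional[object]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]  # lazily evict the expired entry
            return None
        return value

    def set(self, key: str, value: object) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


def get_customer(customer_id: str, cache: TTLCache,
                 load_from_db: Callable[[str], dict]) -> dict:
    """Cache-aside read: serve from the cache, fall back to the database on a miss."""
    key = f"customer:{customer_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    record = load_from_db(customer_id)
    cache.set(key, record)  # populate the cache for subsequent reads
    return record


# Usage with a fake database and a controllable clock.
db_calls = []

def fake_db(customer_id: str) -> dict:
    db_calls.append(customer_id)
    return {"id": customer_id, "name": "Example"}

now = [0.0]
cache = TTLCache(ttl=60, clock=lambda: now[0])
get_customer("42", cache, fake_db)  # miss: loads from the database
get_customer("42", cache, fake_db)  # hit: served from the cache
now[0] = 61.0                       # advance past the TTL
get_customer("42", cache, fake_db)  # expired: loads again
print(len(db_calls))  # 2
```

The TTL bounds how stale a cached record can become. Data that must always be current (balances, for example) would need explicit invalidation on write rather than relying on expiry alone.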

As the architectural layers came together, ensuring security and efficiency across the entire development lifecycle became a priority. A DevSecOps pipeline was implemented to integrate security into every stage of the software development lifecycle (SDLC).

DevSecOps pipeline

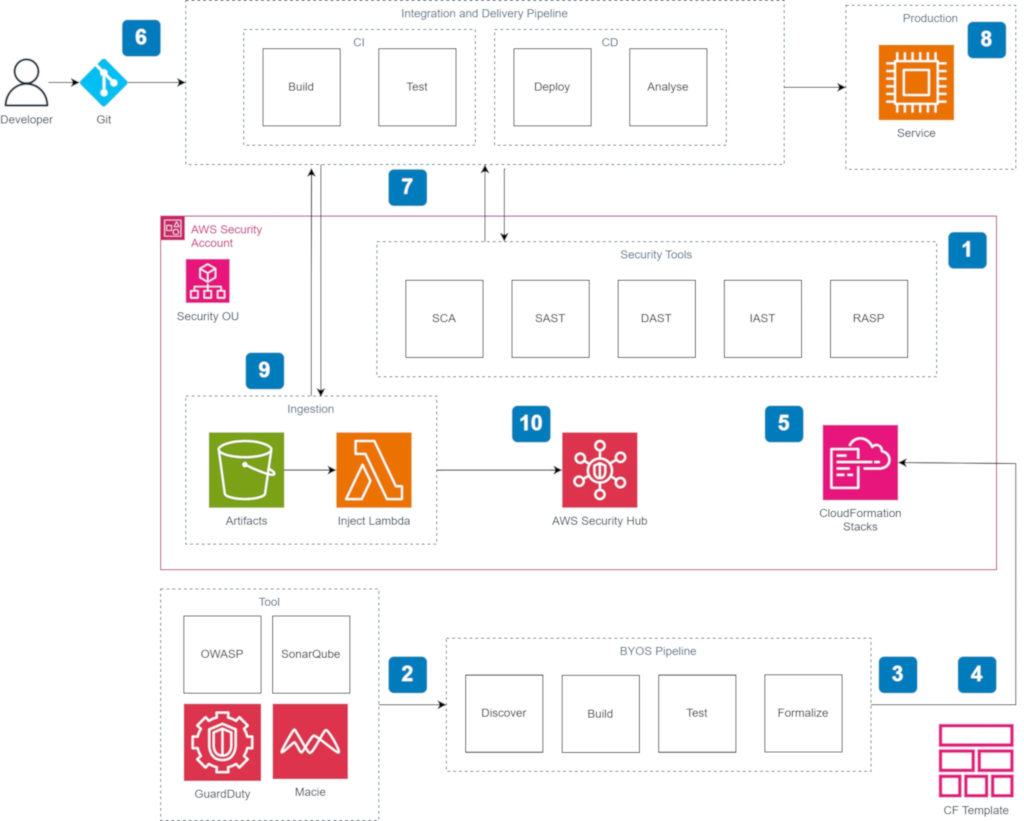

DevSecOps Module Schema

A robust DevSecOps pipeline was implemented to ensure seamless integration of security checks into the CI/CD process. This approach prioritized automation, real-time monitoring, and proactive mitigation of vulnerabilities, enabling secure and efficient deployments.
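One common way to make a pipeline enforce security, rather than just report on it, is a severity gate: the build fails when any scanner reports a finding at or above an agreed severity. The Python sketch below assumes that findings from tools such as SonarQube or OWASP scanners have already been normalized into a common `Finding` shape. That shape, the severity scale, the default `HIGH` threshold, and the rule IDs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical severity scale shared by all normalized scanner outputs.
SEVERITY_ORDER = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


@dataclass
class Finding:
    tool: str      # which scanner reported it (illustrative labels)
    rule_id: str   # scanner-specific rule or advisory identifier
    severity: str  # one of the SEVERITY_ORDER keys


def security_gate(findings: Iterable[Finding], fail_at: str = "HIGH") -> List[Finding]:
    """Return the findings that should block the release (severity >= fail_at)."""
    threshold = SEVERITY_ORDER[fail_at]
    return [f for f in findings if SEVERITY_ORDER[f.severity.upper()] >= threshold]


def exit_code(blocking: List[Finding]) -> int:
    """A non-zero exit code is what makes a CI/CD stage fail."""
    return 1 if blocking else 0


findings = [
    Finding("sast", "RULE-001", "CRITICAL"),
    Finding("dependency-scan", "RULE-002", "MEDIUM"),
]
blocking = security_gate(findings)
for f in blocking:
    print(f"BLOCKING {f.tool} {f.rule_id} ({f.severity})")
print("exit code:", exit_code(blocking))  # exit code: 1
```

In a real pipeline step, the script would end with `sys.exit(exit_code(blocking))` so that the build stage fails. The threshold then becomes a policy decision the team can tighten over time.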

To complement the DevSecOps pipeline, robust testing and quality assurance processes were embedded into the software lifecycle, ensuring reliability at every step.

Testing and quality assurance

Testing and quality assurance (QA) processes were seamlessly integrated into the software development lifecycle (SDLC) to maintain high standards of reliability and security.

- Test cases are partly generated using AI, reviewed by the development team, and approved by the QA lead.

- Automated mapping with a Test Management System ensures streamlined test coverage tracking and reporting.

- Comprehensive regression testing and security scans are conducted before each release.

- Live monitoring is enabled with dashboards, alarms, and notifications to detect and resolve issues proactively.
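Live-monitoring alarms of the kind listed above are commonly modeled on CloudWatch's "M out of N" evaluation. An alarm fires when at least `DatapointsToAlarm` of the last `EvaluationPeriods` datapoints breach the threshold, which filters out isolated spikes. The Python sketch below is a simplified model of that rule. It ignores missing-data handling and the `INSUFFICIENT_DATA` state. The latency samples and the 800 ms threshold are hypothetical.

```python
from typing import Sequence


def evaluate_alarm(datapoints: Sequence[float], threshold: float,
                   evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified "M out of N" rule: ALARM if at least M of the last N
    datapoints are above the threshold, otherwise OK."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    window = datapoints[-evaluation_periods:]
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# Hypothetical p99 latency samples in milliseconds, one per minute.
latency_p99 = [180, 220, 950, 1020, 240, 990]
print(evaluate_alarm(latency_p99, threshold=800, evaluation_periods=5, datapoints_to_alarm=3))  # ALARM
print(evaluate_alarm(latency_p99, threshold=800, evaluation_periods=5, datapoints_to_alarm=4))  # OK
```

Raising M relative to N trades detection speed for fewer false alarms. The right balance depends on how costly a missed incident is compared with a spurious page.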

By combining cutting-edge tools, automated processes, and continuous monitoring, the DevSecOps pipeline provides a secure, efficient, and resilient foundation for software delivery.

AWS CloudFormation and AWS SAM were used for the infrastructure setup.
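The Python sketch below shows how several features described in this case study can be declared as infrastructure as code. It builds a minimal AWS SAM template and prints it as JSON; SAM accepts JSON as well as YAML. The resource names, runtime, memory, timeout, and concurrency values are hypothetical. The property names themselves are standard `AWS::Serverless::Function` settings:

- `AutoPublishAlias` and `DeploymentPreference` enable gradual canary deployments through CodeDeploy.
- `ReservedConcurrentExecutions` and `ProvisionedConcurrencyConfig` control concurrency.
- `DeadLetterQueue` captures failed asynchronous invocations.

```python
import json

# Hypothetical names and values; only the property names are standard SAM settings.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Transform": "AWS::Serverless-2016-10-31",
    "Resources": {
        "ApplicationDlq": {"Type": "AWS::SQS::Queue"},
        "ProcessApplicationFunction": {
            "Type": "AWS::Serverless::Function",
            "Properties": {
                "Handler": "index.handler",
                "Runtime": "nodejs20.x",
                "CodeUri": "dist/",
                "MemorySize": 512,
                "Timeout": 30,
                # Publish a new version on every deploy and point the "live" alias at it.
                "AutoPublishAlias": "live",
                # CodeDeploy shifts 10% of traffic first, the remaining 90% after 5 minutes.
                "DeploymentPreference": {"Type": "Canary10Percent5Minutes"},
                # Concurrency set aside for this function (also acts as its ceiling).
                "ReservedConcurrentExecutions": 50,
                # Pre-initialized environments on the alias: no cold starts up to 5 concurrent requests.
                "ProvisionedConcurrencyConfig": {"ProvisionedConcurrentExecutions": 5},
                # Failed asynchronous invocations are sent to the SQS queue above.
                "DeadLetterQueue": {
                    "Type": "SQS",
                    "TargetArn": {"Fn::GetAtt": ["ApplicationDlq", "Arn"]},
                },
            },
        },
    },
}

print(json.dumps(template, indent=2))
```

Two constraints follow from these settings:

- SAM requires `AutoPublishAlias` whenever `ProvisionedConcurrencyConfig` is set, because provisioned concurrency applies to a published version or alias.
- The provisioned amount cannot exceed the function's reserved concurrency.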

Testing and quality assurance ensured the software met high standards of functionality and resilience. A disaster recovery plan ensured the system could withstand disruptions while maintaining business continuity.

Disaster recovery plan (DR)

Disaster recovery is essential to maintain business continuity and protect workloads against unforeseen failures or disasters. Our approach employs two key strategies tailored for different priorities: Backup and Restore for low-priority workloads and Zero-Downtime Recovery for mission-critical applications.

| Strategy | Description | RPO/RTO | Cost | Best For |
|---|---|---|---|---|
| Backup and Restore | This strategy mitigates data loss or corruption by relying on scheduled automated backups. It is suitable for workloads where occasional downtime is acceptable. Backups can be restored to bring the system back online after an incident, ensuring data integrity and availability. | Hours | $ | Low-priority workloads |
| Zero-Downtime Recovery | This high-availability strategy involves deploying workloads across multiple regions simultaneously. Traffic is served from all active regions, ensuring seamless access for users. While complex and costly, it reduces recovery time to near zero. For data corruption, backups act as a fallback to recover a consistent state. | Near zero | $$$$ | Mission-critical workloads |
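A small calculation makes the RPO of Backup and Restore concrete. The data lost in an incident is everything written since the last completed backup, so the worst-case RPO approaches the backup interval. RTO then adds the time needed to restore and bring the system back online. The Python sketch below computes the data-loss window for a hypothetical schedule of one backup every six hours.

```python
from datetime import datetime, timedelta
from typing import List


def achieved_rpo(backups: List[datetime], failure_at: datetime) -> timedelta:
    """Data-loss window: time from the last backup taken before the failure to the failure."""
    earlier = [b for b in backups if b <= failure_at]
    if not earlier:
        raise ValueError("no backup precedes the failure")
    return failure_at - max(earlier)


# Hypothetical schedule: a backup every 6 hours starting at midnight.
start = datetime(2024, 1, 1, 0, 0)
backups = [start + timedelta(hours=6 * i) for i in range(4)]  # 00:00, 06:00, 12:00, 18:00
failure = datetime(2024, 1, 1, 17, 30)
print(achieved_rpo(backups, failure))  # 5:30:00
```

A failure just before the next scheduled backup loses almost the full six-hour interval. This is why this strategy is reserved for workloads that can tolerate losing hours of data.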

The combination of these strategies ensures that all workloads—whether low-priority or mission-critical—are safeguarded against disasters.

Beyond recovery, the focus extended to ensuring uninterrupted service through high availability, scalability, and resilience. By integrating advanced deployment strategies and elastic scaling, the architecture was designed to proactively handle varying loads and potential disruptions.

High-availability, scalability, and resilience

Ensuring high availability, scalability, and resilience is critical for maintaining robust cloud applications. By implementing a combination of deployment strategies, elastic scaling mechanisms, and fault tolerance, we ensure uninterrupted service even under heavy loads or during failures.

| Feature | Description |
|---|---|
| Versioning and Blue-Green Deployment | Workloads use versioning to maintain a history of code deployments and configurations. With Lambda function aliases, we enable blue-green and rolling deployments, minimizing downtime and reducing deployment risks. |
| Scaling and Elasticity | Workloads automatically scale based on demand, handling thousands of concurrent requests. Elastic scaling ensures additional instances are launched when required, with quotas adjustable to tens of thousands if necessary. |
| High Availability | Applications run across multiple availability zones, so service continues and events keep being processed even if a single zone fails. |
| Reserved and Provisioned Concurrency | Reserved concurrency sets aside part of the account's concurrency quota for critical functions so that other workloads cannot starve them. Provisioned concurrency keeps a defined number of execution environments initialized, eliminating cold starts up to that level of traffic. |
| Retries and Replays | Predefined retry strategies handle errors with delays between attempts. Queued messages support automatic replay, with escalations and alerts triggered if retries fail. |
| Dead-Letter Queues (DLQ) and Re-Drive Queues | Runtime errors are captured in DLQs for inspection and resolution. Re-drive queues enable automatic retries with exponential back-off mechanisms to prevent cascading failures. |
| Message Bus Archives | A resilient message bus archives all communication in real time. This protects against data loss by allowing messages to be replayed after runtime errors or system failures. |
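The retry, back-off, and dead-letter behavior in the table can be sketched in a few lines. The Python example below is a minimal illustration, not the project's implementation; `process_with_retries` and its parameters are hypothetical.

It retries a handler with exponential back-off and "full jitter". Full jitter picks a random delay between zero and the exponential cap, which spreads out retries from many clients. Once attempts run out, the message is parked in a dead-letter list. In the actual architecture, managed services play these roles: Lambda retry settings, SQS redrive, and DLQs.

```python
import random
import time
from typing import Callable, List


def process_with_retries(message: dict,
                         handler: Callable[[dict], None],
                         dlq: List[dict],
                         max_attempts: int = 4,
                         base_delay: float = 0.2,
                         max_delay: float = 5.0,
                         sleep: Callable[[float], None] = time.sleep) -> bool:
    """Run handler(message), retrying with exponential back-off and full jitter.

    Returns True on success. After max_attempts failures the message and the
    last error are appended to the dead-letter list and False is returned.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            handler(message)
            return True
        except Exception as exc:  # a real consumer would retry only transient errors
            if attempt == max_attempts:
                dlq.append({"message": message, "error": repr(exc), "attempts": attempt})
                return False
            # Exponential cap: base, 2*base, 4*base, ... bounded by max_delay.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # full jitter
    return False  # unreachable; the last attempt always returns above


# Usage: one handler fails twice and then succeeds, another always fails.
calls = {"n": 0}

def flaky(msg: dict) -> None:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("downstream timeout")

def always_fails(msg: dict) -> None:
    raise ValueError("malformed payload")

dlq: List[dict] = []
no_wait = lambda seconds: None  # skip real sleeping in the example
ok1 = process_with_retries({"id": "app-1"}, flaky, dlq, sleep=no_wait)
ok2 = process_with_retries({"id": "app-2"}, always_fails, dlq, sleep=no_wait)
print(ok1, calls["n"], ok2, len(dlq))  # True 3 False 1
```

Injecting `sleep` keeps the function testable without real delays. Capping the delay with `max_delay` prevents a long outage from producing unbounded waits.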

A system built for resilience must also deliver consistent performance. The performance optimization plan focused on minimizing latency, maximizing resource utilization, and maintaining responsiveness, ensuring the solution could adapt to real-world demands.

Performance optimization plan (PO)

By leveraging advanced techniques and tools, we enhance responsiveness, reduce latency, and optimize resource utilization.

| Optimization Technique | Description |
|---|---|
| Tree-Shaking Strategy | We use esbuild to eliminate unused imports and code, reducing deployment package sizes and improving efficiency. |
| Package Compression | All packages are compressed to reduce warm-up time and improve cold-start performance. |
| Async Processing | Blocking operations are replaced with asynchronous counterparts, fully utilizing non-blocking event loops for faster execution. |
| Memory and CPU Tuning | Memory allocation and CPU usage are fine-tuned to balance execution speed and cost. |
| Provisioned and Reserved Concurrency | Critical functions use provisioned and reserved concurrency to prevent throttling and ensure responsiveness during high traffic. |
| Timeout Configuration | Timeouts are carefully configured to avoid unnecessary resource locking and optimize execution durations. |
| Layer Management | Shared dependencies, runtime binaries, and custom configurations are packaged into layers to improve reusability and reduce deployment size. |
| Batch Processing | Batch size and batch window configurations minimize invocations and maximize resource utilization for event-driven workloads. |
| Efficient Data Fetching | Workloads employ batch reads/writes to reduce database calls and improve query performance. |
| Cache Management | In-memory caching solutions, such as ElastiCache and DAX, are used to enhance performance for frequently accessed data. |
| Payload Compression | Large payloads are compressed to reduce costs and improve latency for API Gateway and SNS services. |
| Distributed Tracing | AWS X-Ray provides detailed invocation paths and identifies bottlenecks across distributed services. |
| Parallelism | Parallel processing techniques are employed to reduce execution time for complex operations. |
| Performance Testing | Tools like AWS Lambda Power Tuning are used to experiment with memory and CPU configurations for optimal performance. |
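Batch processing, efficient data fetching, async processing, and parallelism often combine into one pattern. Records are split into batches, and the batch calls are issued concurrently with a cap on in-flight requests so that downstream services are not overwhelmed. The Python sketch below shows that pattern with `asyncio`. The batch size of 25 mirrors DynamoDB's `BatchWriteItem` limit of 25 items per request. `write_all`, `fake_write`, and the concurrency cap of 4 are hypothetical. A real writer would also need to resend any unprocessed items the service returns.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence, TypeVar

T = TypeVar("T")


def chunk(items: Sequence[T], size: int) -> List[Sequence[T]]:
    """Split items into consecutive batches of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


async def write_all(items: Sequence[T],
                    write_batch: Callable[[Sequence[T]], Awaitable[None]],
                    batch_size: int = 25,
                    max_concurrency: int = 4) -> int:
    """Write items in batches, with at most max_concurrency batch calls in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(batch: Sequence[T]) -> None:
        async with semaphore:  # waits here when the concurrency cap is reached
            await write_batch(batch)

    batches = chunk(items, batch_size)
    await asyncio.gather(*(guarded(b) for b in batches))
    return len(batches)


# Usage with a fake writer that records how many calls overlap.
in_flight = 0
peak = 0
written: List[int] = []

async def fake_write(batch: Sequence[int]) -> None:
    global in_flight, peak
    in_flight += 1
    peak = max(peak, in_flight)
    await asyncio.sleep(0.01)  # simulated network round trip
    written.extend(batch)
    in_flight -= 1

batch_calls = asyncio.run(write_all(list(range(110)), fake_write))
print(batch_calls, len(written))  # 5 110
print("peak concurrent batch calls:", peak)
```

Batching cuts the number of round trips, and the semaphore turns "as parallel as possible" into "as parallel as the downstream can safely handle". Both knobs are natural candidates for the tuning and load tests described in the table.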

Summary

This project was more than just an upgrade — it was a redefinition of how a banking system can operate. The team was able to deliver a platform that not only meets the bank's operational needs but positions it for future innovation and growth. The journey showcased the importance of aligning technology choices with business objectives, creating a solution that is robust, secure, and adaptable.

Perhaps the most significant takeaway from this project is the power of modern cloud architectures to simplify complexity. The system seamlessly balances scalability, security, and performance, proving that advanced technology can be made accessible and manageable.